There is a major question to be addressed here. Why are we separating training and prediction to begin with?

It is true that in machine learning examples and courses, where all the data is known in advance (including the data to be predicted, usually called the test set), a very simple way to build the predictor is to stack the training and test data together.

You then run feature engineering on both the train and test data, train on the “training set,” and predict on the “test set” to get the results, all in one and the same pipeline.
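As a rough illustration of this single-pipeline approach, here is a minimal sketch in plain Python. The toy data, the min-max scaling step, and the `fit_line` helper are assumptions made up for the example, not part of any particular course or library.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept (hypothetical helper)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

# Stack labeled training rows and unlabeled test rows (target is None).
train_rows = [(10.0, 21.0), (20.0, 41.0), (30.0, 61.0)]
test_rows = [(40.0, None), (50.0, None)]
stacked = train_rows + test_rows

# Feature engineering on the stacked data: min-max scale the raw feature.
raw = [x for x, _ in stacked]
lo, hi = min(raw), max(raw)
scaled = [(x - lo) / (hi - lo) for x in raw]

# Train on the rows that have a target, predict on the rows that do not.
train_x = [s for s, (_, y) in zip(scaled, stacked) if y is not None]
train_y = [y for _, y in stacked if y is not None]
slope, intercept = fit_line(train_x, train_y)
predictions = [slope * s + intercept
               for s, (_, y) in zip(scaled, stacked) if y is None]
print(predictions)
```

Note that the scaling step reads the test rows too, which only works because the data to be predicted is already available when the pipeline runs.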

However, in real-life systems, you usually have the training data up front, while the data to be predicted arrives only at the moment it needs to be processed. In other words, you watch the movie at one time and are asked questions about it later on, which means answering should be easy and fast.

Moreover, it is usually not necessary to retrain the entire model each time new data comes in: training takes time (possibly weeks for some image sets), and a trained model should remain stable enough over time.

That is why training and predicting can, or even should, be clearly separated in many systems. This separation also better reflects how an intelligent system (artificial or not) learns.
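One way to picture this separation is a training step that runs rarely and writes its result to disk, and a prediction step that only loads that result. The sketch below assumes a toy least-squares model stored as JSON. The function names and the `model.json` file name are hypothetical choices for illustration.

```python
import json
import os
import tempfile

def train(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return {"slope": slope, "intercept": mean_y - slope * mean_x}

def save_model(model, path):
    with open(path, "w") as f:
        json.dump(model, f)

def load_model(path):
    with open(path) as f:
        return json.load(f)

def predict(model, x):
    return model["slope"] * x + model["intercept"]

# Training phase: potentially slow, run once or on a schedule.
model_path = os.path.join(tempfile.gettempdir(), "model.json")
save_model(train([1.0, 2.0, 3.0, 4.0], [3.0, 5.0, 7.0, 9.0]), model_path)

# Prediction phase: fast, run whenever new data arrives.
model = load_model(model_path)
prediction = predict(model, 10.0)
print(prediction)  # 21.0 for this toy data, which follows y = 2x + 1
```

The prediction phase never touches the training data. It needs only the stored parameters, so it can run in a different process or at a much later time.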

The Connection With Overfitting

The separation of training and prediction is also a good way to address the overfitting problem.

In statistics, overfitting is “the production of an analysis that corresponds too closely or exactly to a particular set of data, and may, therefore, fail to fit additional data or predict future observations reliably”.

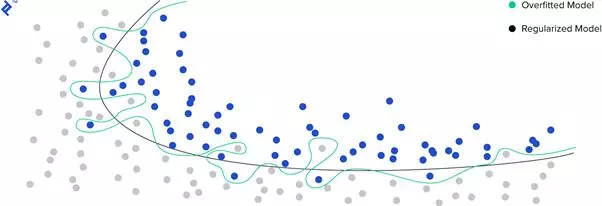

The green line represents an overfitted model and the black line represents a regularized model. While the green line best follows the training data, it is too dependent on that data and it is likely to have a higher error rate on new unseen data, compared to the black line.

Overfitting is particularly common in datasets with many features or with limited training data. In both cases, the data contains more information than the predictor can validate, and some of the features might not even be linked to the predicted variable. Noise itself can then be interpreted as signal.

A good way of controlling overfitting is to train on one part of the data and predict on another part for which we have the ground truth. The error measured on that held-out part is then roughly the error to expect on new data, provided the data we train on is representative of the real system and its future states.
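To make the idea concrete, the following sketch trains a deliberately overfitting model (a one-nearest-neighbor classifier that memorizes its training points) on synthetic data with noisy labels, then compares the error on the training part with the error on a held-out part. The data generator, the 20% noise level, and the 200/100 split are arbitrary assumptions for the example.

```python
import random

def make_data(n, rng, noise=0.2):
    """Label is 1 when x > 0.5, then flipped with probability `noise`."""
    data = []
    for _ in range(n):
        x = rng.random()
        y = int(x > 0.5)
        if rng.random() < noise:
            y = 1 - y
        data.append((x, y))
    return data

def nearest_neighbor_predict(train_set, x):
    """Return the label of the closest training point (pure memorization)."""
    return min(train_set, key=lambda point: abs(point[0] - x))[1]

def error_rate(train_set, eval_set):
    wrong = sum(nearest_neighbor_predict(train_set, x) != y
                for x, y in eval_set)
    return wrong / len(eval_set)

rng = random.Random(0)
data = make_data(300, rng)  # rows are independent, so a plain slice is a fair split
train_set, holdout_set = data[:200], data[200:]

train_error = error_rate(train_set, train_set)
holdout_error = error_rate(train_set, holdout_set)
print(f"train error:   {train_error:.2f}")
print(f"holdout error: {holdout_error:.2f}")
```

The memorizing model reproduces every training label, noise included, so its training error says nothing about future data. The held-out error is the figure that approximates what to expect on unseen data.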

So if we design a proper training and prediction pipeline together with a correct split of the data, we not only address the overfitting problem but can also reuse that architecture for predicting on new data.

The last step is to check that the error on new data matches the expected one. There is usually a shift (actual performance is typically somewhat worse than expected), and one should determine what shift is acceptable, but that is not the topic of this article.