Introduction 🌍💡

In today’s fast-paced digital world, businesses rely on cloud-native applications for scalability, flexibility, and efficiency, but managing these applications across distributed environments can be challenging. This is where Kubernetes steps in: an open-source platform designed to automate the deployment, scaling, and operation of containerized applications. Originally developed by Google and now maintained under the Cloud Native Computing Foundation (CNCF), Kubernetes has become the de facto standard for orchestrating containers.

This article explores what Kubernetes is, how it works, and why it is essential for managing cloud-native applications.

What is Kubernetes? 🤖📦

Kubernetes, often abbreviated as K8s, is a container orchestration platform that automates the deployment, scaling, and management of containerized applications. It groups containers into pods and runs them on clusters of physical or virtual machines. Kubernetes is designed to keep applications running consistently and reliably, even as demand fluctuates.

Key Features of Kubernetes:

- Automated Scaling: Adjusts the number of application instances based on observed metrics such as CPU utilization.

- Self-Healing: Restarts failed containers and reschedules pods away from unresponsive nodes.

- Load Balancing: Distributes network traffic to maintain performance.

- Declarative Configuration: Uses manifest files, typically written in YAML, to define desired application states.

- Multi-Cloud Support: Runs on any cloud platform, including AWS, Azure, and Google Cloud.
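
To make the declarative idea concrete, here is a minimal sketch in Python that builds a Deployment manifest as a plain dictionary and prints it as JSON, a format Kubernetes accepts alongside YAML. The application name `web`, the image `example.com/web:1.0`, and the port are hypothetical placeholders, not values from any real project.

```python
import json


def deployment_manifest(name, image, replicas):
    """Build a minimal apps/v1 Deployment manifest as a plain dictionary."""
    labels = {"app": name}  # hypothetical label used to tie the pieces together
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,  # desired number of pod copies
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": 8080}],
                        }
                    ]
                },
            },
        },
    }


# Hypothetical application: three copies of a web container.
manifest = deployment_manifest("web", "example.com/web:1.0", replicas=3)
print(json.dumps(manifest, indent=2))
```

The manifest states what should exist (three replicas of one image) rather than the steps to create it; working out those steps is left to Kubernetes.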

How Kubernetes Works 🧠⚙️

Kubernetes operates using a cluster-based architecture that includes several key components:

- Cluster: A group of machines (nodes) that run Kubernetes and host applications.

- Node: A physical or virtual machine in the cluster that runs pods.

- Pod: The smallest deployable unit, containing one or more containers that share resources.

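- Control Plane: Manages the cluster through components such as the API server, scheduler, controller manager, and etcd, handling scheduling, monitoring, and maintaining the desired state.

- Kubelet: An agent running on each node that ensures the containers in the pods assigned to that node are running and healthy.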

- Service: Provides a stable IP address and DNS name for accessing applications, regardless of where they run.
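
As a rough illustration of how a Service finds its pods, the sketch below matches pods by label and rotates through the matching addresses. The pod names, IP addresses, and labels are invented for the example, and round-robin is a simplification: how traffic is actually distributed depends on how the cluster's networking is configured.

```python
from itertools import cycle

# Hypothetical pods; in a real cluster these come and go over time.
pods = [
    {"name": "web-1", "ip": "10.0.0.11", "labels": {"app": "web"}},
    {"name": "web-2", "ip": "10.0.1.27", "labels": {"app": "web"}},
    {"name": "db-1", "ip": "10.0.2.5", "labels": {"app": "db"}},
]


def select_endpoints(selector, pods):
    """Return the IPs of pods whose labels contain every selector key/value pair."""
    return [
        pod["ip"]
        for pod in pods
        if all(pod["labels"].get(key) == value for key, value in selector.items())
    ]


endpoints = select_endpoints({"app": "web"}, pods)
backend = cycle(endpoints)  # simplified round-robin over matching pods
first_three = [next(backend) for _ in range(3)]
print(endpoints, first_three)
```

Clients only ever address the Service; which pod answers is decided by the label match, so pods can be replaced without clients noticing.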

Kubernetes manifests, usually written in YAML, define the desired state of applications. Controllers in the control plane continuously compare that desired state with the cluster’s actual state and act to close any gap, for example by starting a replacement pod when one fails. This keeps applications available and performant.
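
The desired-state idea can be sketched as a small reconciliation function. This is a toy model rather than how any Kubernetes controller is actually written; the `web-N` pod names and the `reconcile` helper are hypothetical.

```python
def reconcile(desired_replicas, running, next_id):
    """Return the pod list after one reconciliation pass, plus the next free pod id."""
    pods = list(running)
    while len(pods) < desired_replicas:  # too few pods: start replacements
        pods.append(f"web-{next_id}")
        next_id += 1
    while len(pods) > desired_replicas:  # too many pods: remove the extras
        pods.pop()
    return pods, next_id


pods, next_id = reconcile(3, [], next_id=1)  # initial rollout
pods.remove("web-2")                         # simulate a crashed pod
pods, next_id = reconcile(3, pods, next_id)  # the loop restores the count
print(pods)
```

The function never needs to know why the count is off; it only compares desired and actual state, which is what makes the same loop cover first deployment, failure recovery, and scale-down.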

Benefits of Using Kubernetes for Cloud-Native Apps ☁️💡

✅ 1. Scalability and Flexibility

Kubernetes can scale applications automatically based on demand, reducing the need for manual intervention. Horizontal scaling adds more instances (pods) of an application and is handled by the built-in Horizontal Pod Autoscaler, while vertical scaling increases the CPU and memory allocated to existing instances and is typically provided by the Vertical Pod Autoscaler add-on.
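
For horizontal scaling, the Kubernetes documentation describes the core calculation as the current replica count multiplied by the ratio of the observed metric to the target metric, rounded up. The sketch below applies that formula; the replica bounds and the sample numbers are illustrative assumptions, and the real autoscaler handles further details (missing metrics, stabilization windows) that are left out here.

```python
import math


def desired_replicas(current, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Apply ceil(current * current_metric / target_metric), clamped to the bounds."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current  # close enough to the target: leave the count alone
    wanted = math.ceil(current * ratio)
    return max(min_replicas, min(max_replicas, wanted))


# Four replicas with a 60% CPU target (illustrative numbers).
scale_up = desired_replicas(4, current_metric=90, target_metric=60)
scale_down = desired_replicas(4, current_metric=30, target_metric=60)
no_change = desired_replicas(4, current_metric=63, target_metric=60)
print(scale_up, scale_down, no_change)
```

The tolerance band keeps the replica count from changing on small fluctuations around the target.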

💪 2. High Availability and Reliability

Kubernetes keeps applications available even if individual containers or nodes fail. The platform continuously monitors the health of containers, restarts or replaces any that fail, and reschedules pods from failed nodes onto healthy ones, providing built-in self-healing capabilities.

🧩 3. Simplified Deployment and Management

Kubernetes uses declarative configuration files to define application states, making deployment predictable and repeatable. Engineers can update applications without downtime using rolling updates or revert to previous versions using rollbacks.
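
A rolling update can be pictured as swapping old pods for new ones a few at a time. The sketch below simulates that under two simplifying assumptions: new pods become ready immediately, and only the `maxSurge` and `maxUnavailable` settings of a Deployment are modeled. The `rolling_update` function itself is hypothetical.

```python
def rolling_update(replicas, max_surge=1, max_unavailable=0):
    """Return the (old, new) pod counts after each step of a simplified rollout."""
    if max_surge == 0 and max_unavailable == 0:
        # Kubernetes rejects this combination too: no progress would be possible.
        raise ValueError("max_surge and max_unavailable cannot both be 0")
    old, new = replicas, 0
    steps = [(old, new)]
    while old > 0 or new < replicas:
        # Start new pods without exceeding replicas + max_surge in total.
        can_start = min(replicas - new, replicas + max_surge - (old + new))
        new += max(can_start, 0)
        # Stop old pods without dropping below replicas - max_unavailable.
        can_stop = min(old, (old + new) - (replicas - max_unavailable))
        old -= max(can_stop, 0)
        steps.append((old, new))
    return steps


steps = rolling_update(3, max_surge=1, max_unavailable=0)
print(steps)
```

With these settings the total number of pods never drops below three, which is why users see no downtime; a rollback is the same process run toward the previous version.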

🌎 4. Portability Across Cloud Platforms

Kubernetes is cloud-agnostic, meaning it can run on any cloud platform or on-premises infrastructure. This flexibility allows businesses to avoid vendor lock-in and choose the best environment for their needs.

🗂️ 5. Efficient Resource Utilization

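Kubernetes improves resource usage by scheduling workloads onto nodes that have enough free capacity for each pod’s resource requests, which helps keep any single machine from being overburdened. Resource limits cap what a container may consume, and namespace-level quotas cap total CPU and memory use across a team or project.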
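
Placement by resource requests can be sketched as a filter step followed by a scoring step. The node names and capacities below are made up, and the scoring rule (prefer the node with the most CPU left over) is one simple choice among many; the real scheduler weighs a much larger set of criteria.

```python
def pick_node(pod_request, nodes):
    """Pick the feasible node with the most CPU left after placement, or None."""
    feasible = [
        node for node in nodes
        if node["free_cpu_m"] >= pod_request["cpu_m"]
        and node["free_mem_mi"] >= pod_request["mem_mi"]
    ]
    if not feasible:
        return None  # no node fits: the pod would stay Pending
    best = max(feasible, key=lambda node: node["free_cpu_m"] - pod_request["cpu_m"])
    return best["name"]


# Hypothetical nodes: free CPU in millicores, free memory in MiB.
nodes = [
    {"name": "node-a", "free_cpu_m": 500, "free_mem_mi": 1024},
    {"name": "node-b", "free_cpu_m": 1500, "free_mem_mi": 512},
    {"name": "node-c", "free_cpu_m": 1000, "free_mem_mi": 2048},
]
choice = pick_node({"cpu_m": 750, "mem_mi": 1024}, nodes)
too_big = pick_node({"cpu_m": 4000, "mem_mi": 1024}, nodes)
print(choice, too_big)
```

Here `node-a` lacks CPU and `node-b` lacks memory, so only `node-c` is feasible; a request that fits nowhere is not placed at all.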

🔒 6. Enhanced Security and Compliance

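Kubernetes provides security features including role-based access control (RBAC), network policies, and secret management. Note that Secrets are only base64-encoded by default, so encryption at rest must be enabled separately. Used correctly, these features help protect sensitive data and support compliance with industry regulations.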
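
The core of an RBAC check can be sketched as matching a request against a list of rules. The rule fields (`apiGroups`, `resources`, `verbs`) mirror those of a Kubernetes Role, but the rules themselves and the `is_allowed` helper are hypothetical, and wildcards, resource names, and namespaces are deliberately left out.

```python
# Hypothetical rules for a read-mostly operator role.
role_rules = [
    {"apiGroups": [""], "resources": ["pods"], "verbs": ["get", "list", "watch"]},
    {"apiGroups": ["apps"], "resources": ["deployments"], "verbs": ["get", "update"]},
]


def is_allowed(rules, api_group, resource, verb):
    """Allow the request if any rule matches; RBAC rules only grant, never deny."""
    return any(
        api_group in rule["apiGroups"]
        and resource in rule["resources"]
        and verb in rule["verbs"]
        for rule in rules
    )


can_list_pods = is_allowed(role_rules, "", "pods", "list")
can_delete_pods = is_allowed(role_rules, "", "pods", "delete")
can_update_deployments = is_allowed(role_rules, "apps", "deployments", "update")
print(can_list_pods, can_delete_pods, can_update_deployments)
```

Because permissions are purely additive, anything not explicitly granted is refused, which makes least-privilege roles straightforward to reason about.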

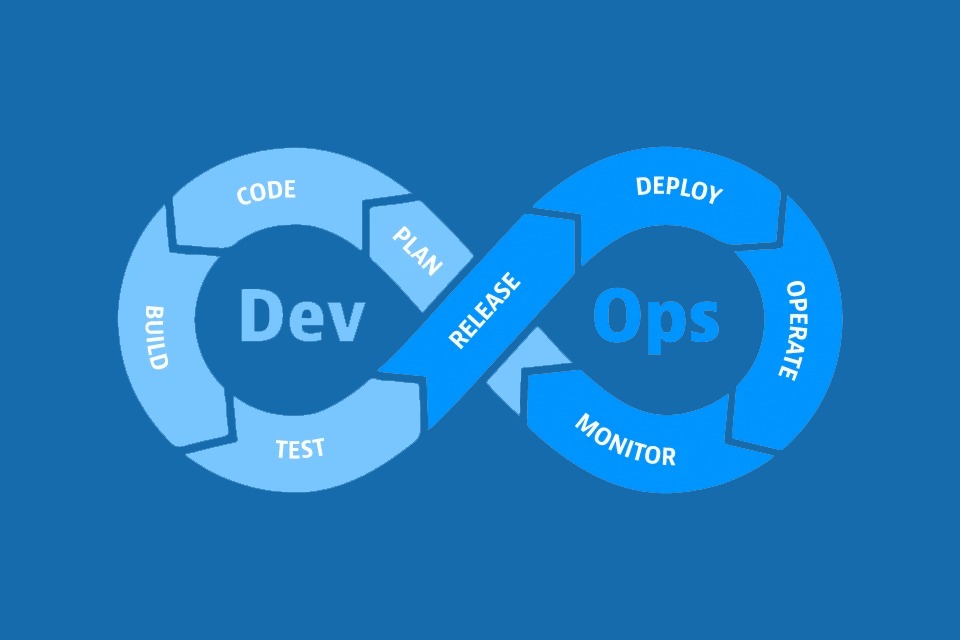

📈 7. Automation and CI/CD Integration

Kubernetes integrates well with Continuous Integration/Continuous Deployment (CI/CD) pipelines, enabling automated testing, deployment, and scaling of applications. Tools like Jenkins, GitLab CI/CD, and Argo CD help streamline development workflows.

Use Cases of Kubernetes in Cloud-Native Applications 🌐🚀

- Microservices Architecture: Kubernetes simplifies the deployment and management of microservices, so that each service can be deployed and scaled independently.

- DevOps and CI/CD Pipelines: Automates application deployment and scaling, reducing manual effort and accelerating release cycles.

- Multi-Cloud and Hybrid Cloud Deployments: Enables businesses to deploy applications across multiple cloud providers, improving redundancy and reducing the risk of downtime.

- AI and Machine Learning: Provides the infrastructure needed to run complex machine learning models and process large datasets efficiently.

- Edge Computing: Extends Kubernetes to edge devices, supporting applications that require low latency and real-time processing.

Popular Kubernetes Tools and Ecosystem 🧩💻

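- Docker: The most widely used tool for building container images. Kubernetes removed its built-in Docker Engine integration (dockershim) in version 1.24, but images built with Docker still run on runtimes such as containerd and CRI-O.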

- Helm: A package manager for Kubernetes, simplifying application deployment and management.

- Prometheus: A monitoring system that collects time-series metrics on application performance and health.

- Grafana: A visualization tool that creates dashboards for monitoring Kubernetes clusters.

- Istio: A service mesh that enhances communication, security, and observability between microservices.

Challenges of Using Kubernetes 🧩⚠️

- Complexity: Kubernetes has a steep learning curve and requires expertise to configure and manage.

- Resource Overhead: Running Kubernetes clusters consumes additional resources, which may be costly for small-scale applications.

- Security Risks: Misconfigurations can lead to security vulnerabilities if not properly managed.

- Maintenance and Monitoring: Continuous monitoring and maintenance are essential to ensure optimal performance and availability.

Future Trends in Kubernetes and Cloud-Native Applications 🔮🌱

- Serverless Kubernetes: Combining Kubernetes with serverless frameworks to reduce operational complexity and costs.

- AI-Driven Automation: Using AI to optimize resource allocation, scaling, and fault recovery.

- Edge Computing Expansion: Deploying Kubernetes clusters closer to end-users for lower latency and improved performance.

- Zero-Trust Security: Enhancing security with zero-trust principles, ensuring strict access controls and data protection.

- Green Kubernetes: Reducing energy consumption and carbon footprint through efficient resource management.

Conclusion ✅🚀

Kubernetes has transformed the way businesses manage cloud-native applications, offering scalability, reliability, and flexibility across diverse environments. By automating deployment, scaling, and resource management, Kubernetes lets developers focus on building applications while the platform maintains performance and availability. As cloud-native technologies continue to evolve, Kubernetes is likely to remain a cornerstone of modern application development.